- Install MediaPipe (in a Jupyter/Colab cell): `!pip install mediapipe opencv-python`

```python
import cv2
import mediapipe as mp
import numpy as np

mp_drawing = mp.solutions.drawing_utils  # helpers for drawing landmarks
mp_pose = mp.solutions.pose              # the Pose solution
```

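```python
# Open the webcam
cap = cv2.VideoCapture(0)

while cap.isOpened():
    ret, frame = cap.read()
    if not ret:  # stop if no frame could be read
        break

    cv2.imshow('Mediapipe Feed', frame)

    if cv2.waitKey(10) & 0xFF == 27:  # close the window when ESC is pressed
        break

cap.release()
cv2.destroyAllWindows()
```

- Basic webcam feed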
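The loop exits on `cv2.waitKey(10) & 0xFF == 27`: `waitKey` waits up to 10 ms for a key press and returns its code, or -1 if no key was pressed, and on some platforms extra high bits may be set. Masking with `0xFF` keeps only the low byte before comparing it with 27, the ASCII code for ESC. A minimal sketch of that check in isolation; the helper name `is_escape` is hypothetical:

```python
ESC = 27  # ASCII code of the ESC key

def is_escape(key_code: int) -> bool:
    """Hypothetical helper: True if a cv2.waitKey() return value means ESC."""
    # Keep only the low 8 bits, as the loop above does with `& 0xFF`
    return (key_code & 0xFF) == ESC

print(is_escape(27))             # True: ESC pressed
print(is_escape(27 | 0x100000))  # True: ESC with extra high bits set
print(is_escape(-1))             # False: no key pressed within the timeout
```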

1. Detection

```python
cap = cv2.VideoCapture(0)

# Set up the MediaPipe Pose instance
with mp_pose.Pose(min_detection_confidence=0.5, min_tracking_confidence=0.5) as pose:
    while cap.isOpened():
        ret, frame = cap.read()
        if not ret:
            break

        # Recolor the captured frame from BGR (OpenCV) to RGB (MediaPipe)
        image = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
        image.flags.writeable = False  # mark read-only so MediaPipe can use it by reference

        # Make detection
        results = pose.process(image)

        # Recolor back to BGR for OpenCV display
        image.flags.writeable = True
        image = cv2.cvtColor(image, cv2.COLOR_RGB2BGR)

        # Render detections (joints in orange, connections in pink)
        mp_drawing.draw_landmarks(image, results.pose_landmarks, mp_pose.POSE_CONNECTIONS,
                                  mp_drawing.DrawingSpec(color=(245,117,66), thickness=2, circle_radius=2),
                                  mp_drawing.DrawingSpec(color=(245,66,230), thickness=2, circle_radius=2))

        cv2.imshow('Mediapipe Feed', image)

        if cv2.waitKey(10) & 0xFF == 27:  # ESC to quit
            break

cap.release()
cv2.destroyAllWindows()
```

- Detects the person and draws the pose skeleton with colored lines.
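OpenCV delivers frames in BGR channel order while MediaPipe expects RGB, which is why each frame is converted before `pose.process`; setting `flags.writeable = False` marks the array read-only, which MediaPipe's examples describe as a way to pass the image by reference. The sketch below reproduces both steps with plain NumPy on a tiny synthetic frame. Reversing the last axis is an illustrative stand-in for `cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)`, not how OpenCV implements it, and the helper name `to_readonly_rgb` is hypothetical.

```python
import numpy as np

def to_readonly_rgb(frame_bgr: np.ndarray) -> np.ndarray:
    """Hypothetical helper: BGR -> RGB copy, then mark it read-only."""
    rgb = np.ascontiguousarray(frame_bgr[..., ::-1])  # reverse channel order B,G,R -> R,G,B
    rgb.flags.writeable = False                       # same flag the loop sets before pose.process
    return rgb

# A 1x2 "frame": one pure-blue and one pure-red pixel in OpenCV's BGR order
frame = np.array([[[255, 0, 0], [0, 0, 255]]], dtype=np.uint8)
rgb = to_readonly_rgb(frame)
print(rgb[0, 0].tolist(), rgb[0, 1].tolist())  # [0, 0, 255] [255, 0, 0]

try:
    rgb[0, 0, 0] = 1  # writing to a read-only array raises ValueError
except ValueError:
    print("read-only")
```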

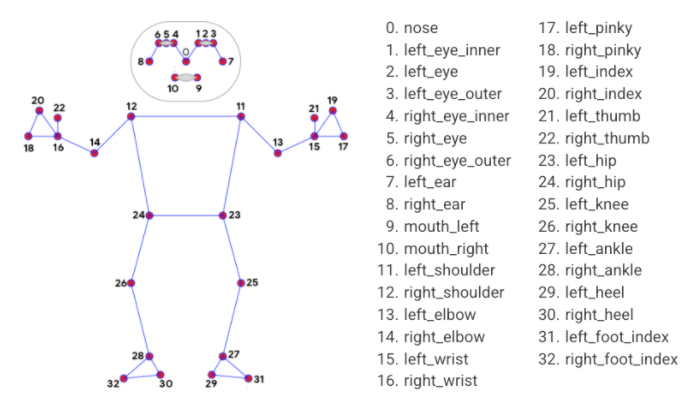

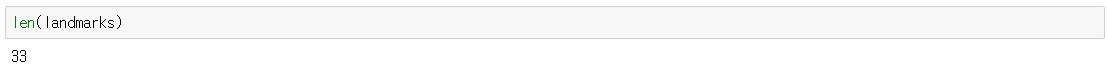

2. Extracting the joints (landmarks)

```python
cap = cv2.VideoCapture(0)

# Set up the MediaPipe Pose instance
with mp_pose.Pose(min_detection_confidence=0.5, min_tracking_confidence=0.5) as pose:
    while cap.isOpened():
        ret, frame = cap.read()
        if not ret:
            break

        # Recolor image to RGB
        image = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
        image.flags.writeable = False

        # Make detection
        results = pose.process(image)

        # Recolor back to BGR
        image.flags.writeable = True
        image = cv2.cvtColor(image, cv2.COLOR_RGB2BGR)

        # Extract landmarks (pose_landmarks is None when no person is detected)
        try:
            landmarks = results.pose_landmarks.landmark
            print(landmarks)
        except AttributeError:
            pass

        # Render detections
        mp_drawing.draw_landmarks(image, results.pose_landmarks, mp_pose.POSE_CONNECTIONS,
                                  mp_drawing.DrawingSpec(color=(245,117,66), thickness=2, circle_radius=2),
                                  mp_drawing.DrawingSpec(color=(245,66,230), thickness=2, circle_radius=2))

        cv2.imshow('Mediapipe Feed', image)

        if cv2.waitKey(10) & 0xFF == 27:
            break

cap.release()
cv2.destroyAllWindows()
```

- Extracts the joint landmarks for each frame and stores them in `landmarks`.
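`results.pose_landmarks.landmark` is a list of 33 landmarks, each with `x` and `y` normalized by the frame width and height, plus `z` and `visibility`; `mp_pose.PoseLandmark` maps joint names to list indices (`LEFT_SHOULDER` is 11, `LEFT_ELBOW` 13, `LEFT_WRIST` 15). The sketch below picks the (x, y) pairs for those joints without a camera. The `Landmark` namedtuple is a hypothetical stand-in for MediaPipe's landmark objects and the index table is copied by hand, so in real code prefer `mp_pose.PoseLandmark` as the article does.

```python
from collections import namedtuple

# Hypothetical stand-in for a MediaPipe pose landmark (normalized coordinates)
Landmark = namedtuple("Landmark", ["x", "y", "z", "visibility"])

# Indices of a few joints, matching the values of mp_pose.PoseLandmark
JOINT_INDEX = {"LEFT_SHOULDER": 11, "LEFT_ELBOW": 13, "LEFT_WRIST": 15}

def joint_xy(landmarks, name):
    """Return the normalized [x, y] of a named joint from a 33-item landmark list."""
    lm = landmarks[JOINT_INDEX[name]]
    return [lm.x, lm.y]

# Fake frame: every landmark at the image centre except the left elbow
landmarks = [Landmark(0.5, 0.5, 0.0, 1.0) for _ in range(33)]
landmarks[13] = Landmark(0.25, 0.75, -0.1, 0.9)

print(joint_xy(landmarks, "LEFT_ELBOW"))     # [0.25, 0.75]
print(joint_xy(landmarks, "LEFT_SHOULDER"))  # [0.5, 0.5]
```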

3. Calculating the angle

def calculate_angle(a,b,c):

a = np.array(a) # First

b = np.array(b) # Mid

c = np.array(c) # End

radians = np.arctan2(c[1]-b[1], c[0]-b[0]) - np.arctan2(a[1]-b[1], a[0]-b[0])

angle = np.abs(radians*180.0/np.pi)

if angle >180.0:

angle = 360-angle

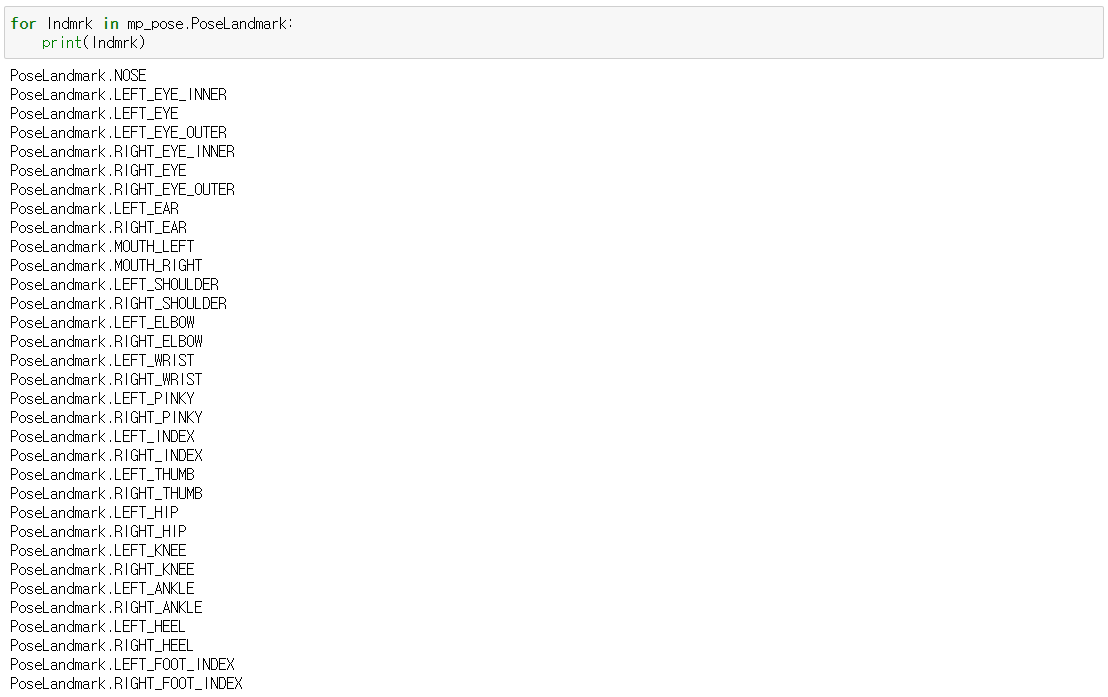

return angleshoulder = [landmarks[mp_pose.PoseLandmark.LEFT_SHOULDER.value].x,landmarks[mp_pose.PoseLandmark.LEFT_SHOULDER.value].y]

elbow = [landmarks[mp_pose.PoseLandmark.LEFT_ELBOW.value].x,landmarks[mp_pose.PoseLandmark.LEFT_ELBOW.value].y]

wrist = [landmarks[mp_pose.PoseLandmark.LEFT_WRIST.value].x,landmarks[mp_pose.PoseLandmark.LEFT_WRIST.value].y]shoulder, elbow, wrist

calculate_angle(shoulder, elbow, wrist)

tuple(np.multiply(elbow, [640, 480]).astype(int))
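`calculate_angle` takes the direction from the vertex `b` to each outer point with `arctan2`, subtracts the two directions, converts to degrees, and folds anything over 180° back, so the result is the inner angle at `b`. Feeding it points whose angle is known is a quick sanity check. The sketch below is a standard-library re-implementation of the same formula (`math.atan2` in place of `np.arctan2`) so it runs without NumPy. The optional `width`/`height` scaling is an assumption not in the original: MediaPipe normalizes x and y by different frame dimensions, so on a non-square frame the angle computed from normalized coordinates can differ from the angle in pixels.

```python
import math

def calculate_angle(a, b, c, width=1.0, height=1.0):
    """Inner angle at b (degrees, 0-180) for points a-b-c.

    width/height (an assumption, not in the original) rescale normalized
    coordinates to pixels so that x and y use the same unit.
    """
    ax, ay = a[0] * width, a[1] * height
    bx, by = b[0] * width, b[1] * height
    cx, cy = c[0] * width, c[1] * height
    radians = math.atan2(cy - by, cx - bx) - math.atan2(ay - by, ax - bx)
    angle = abs(math.degrees(radians))
    if angle > 180.0:  # fold reflex angles back into 0-180
        angle = 360.0 - angle
    return angle

print(round(calculate_angle([0, 0], [1, 0], [2, 0]), 1))  # 180.0: straight arm
print(round(calculate_angle([0, 0], [1, 0], [1, 1]), 1))  # 90.0: right angle
# Same normalized points, measured without and with 640x480 pixel scaling
print(round(calculate_angle([0.4, 0.5], [0.5, 0.5], [0.6, 0.4]), 1))            # 135.0
print(round(calculate_angle([0.4, 0.5], [0.5, 0.5], [0.6, 0.4], 640, 480), 1))  # 143.1
```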

```python
cap = cv2.VideoCapture(0)

# Set up the MediaPipe Pose instance
with mp_pose.Pose(min_detection_confidence=0.5, min_tracking_confidence=0.5) as pose:
    while cap.isOpened():
        ret, frame = cap.read()
        if not ret:
            break

        # Recolor image to RGB
        image = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
        image.flags.writeable = False

        # Make detection
        results = pose.process(image)

        # Recolor back to BGR
        image.flags.writeable = True
        image = cv2.cvtColor(image, cv2.COLOR_RGB2BGR)

        # Extract landmarks (pose_landmarks is None when no person is detected)
        try:
            landmarks = results.pose_landmarks.landmark

            # Get coordinates
            shoulder = [landmarks[mp_pose.PoseLandmark.LEFT_SHOULDER.value].x, landmarks[mp_pose.PoseLandmark.LEFT_SHOULDER.value].y]
            elbow = [landmarks[mp_pose.PoseLandmark.LEFT_ELBOW.value].x, landmarks[mp_pose.PoseLandmark.LEFT_ELBOW.value].y]
            wrist = [landmarks[mp_pose.PoseLandmark.LEFT_WRIST.value].x, landmarks[mp_pose.PoseLandmark.LEFT_WRIST.value].y]

            # Calculate angle
            angle = calculate_angle(shoulder, elbow, wrist)

            # Visualize angle next to the elbow; scale by the actual frame size, not a fixed 640x480
            h, w = image.shape[:2]
            cv2.putText(image, f"{angle:.0f}",
                        tuple(np.multiply(elbow, [w, h]).astype(int)),
                        cv2.FONT_HERSHEY_SIMPLEX, 0.5, (255, 255, 255), 2, cv2.LINE_AA)
        except AttributeError:
            pass

        # Render detections
        mp_drawing.draw_landmarks(image, results.pose_landmarks, mp_pose.POSE_CONNECTIONS,
                                  mp_drawing.DrawingSpec(color=(245,117,66), thickness=2, circle_radius=2),
                                  mp_drawing.DrawingSpec(color=(245,66,230), thickness=2, circle_radius=2))

        cv2.imshow('Mediapipe Feed', image)

        if cv2.waitKey(10) & 0xFF == 27:
            break

cap.release()
cv2.destroyAllWindows()
```

- Calculates the left elbow angle and draws it next to the elbow.
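The angle label is positioned by converting the elbow's normalized (x, y) into pixel coordinates: multiply by the frame width and height, then truncate to integers, which is what the `np.multiply(elbow, ...).astype(int)` call does. A standalone sketch of that conversion follows. The helper name `to_pixel` and the clamping to the frame bounds are illustrative additions, on the assumption that a landmark near the edge or out of view may be reported slightly outside the 0 to 1 range.

```python
def to_pixel(point, width, height):
    """Hypothetical helper: normalized (x, y) -> integer pixel (x, y) inside the frame."""
    x = int(point[0] * width)   # truncate, like .astype(int)
    y = int(point[1] * height)
    # Clamp so text anchored here stays within the image (an added assumption)
    x = min(max(x, 0), width - 1)
    y = min(max(y, 0), height - 1)
    return (x, y)

print(to_pixel([0.5, 0.25], 640, 480))  # (320, 120)
print(to_pixel([1.2, -0.1], 640, 480))  # (639, 0)
```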

4. Counting reps

```python
cap = cv2.VideoCapture(0)

# Curl counter variables
counter = 0
stage = None

# Set up the MediaPipe Pose instance
with mp_pose.Pose(min_detection_confidence=0.5, min_tracking_confidence=0.5) as pose:
    while cap.isOpened():
        ret, frame = cap.read()
        if not ret:
            break

        # Recolor image to RGB
        image = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
        image.flags.writeable = False

        # Make detection
        results = pose.process(image)

        # Recolor back to BGR
        image.flags.writeable = True
        image = cv2.cvtColor(image, cv2.COLOR_RGB2BGR)

        # Extract landmarks (pose_landmarks is None when no person is detected)
        try:
            landmarks = results.pose_landmarks.landmark

            # Get coordinates
            shoulder = [landmarks[mp_pose.PoseLandmark.LEFT_SHOULDER.value].x, landmarks[mp_pose.PoseLandmark.LEFT_SHOULDER.value].y]
            elbow = [landmarks[mp_pose.PoseLandmark.LEFT_ELBOW.value].x, landmarks[mp_pose.PoseLandmark.LEFT_ELBOW.value].y]
            wrist = [landmarks[mp_pose.PoseLandmark.LEFT_WRIST.value].x, landmarks[mp_pose.PoseLandmark.LEFT_WRIST.value].y]

            # Calculate angle
            angle = calculate_angle(shoulder, elbow, wrist)

            # Visualize angle next to the elbow
            h, w = image.shape[:2]
            cv2.putText(image, f"{angle:.0f}",
                        tuple(np.multiply(elbow, [w, h]).astype(int)),
                        cv2.FONT_HERSHEY_SIMPLEX, 0.5, (255, 255, 255), 2, cv2.LINE_AA)

            # Curl counter logic: extended arm = "down", curled arm after "down" = one rep
            if angle > 160:
                stage = "down"
            if angle < 30 and stage == 'down':
                stage = "up"
                counter += 1
                print(counter)
        except AttributeError:
            pass

        # Render curl counter
        # Setup status box
        cv2.rectangle(image, (0,0), (225,73), (245,117,16), -1)

        # Rep data
        cv2.putText(image, 'REPS', (15,12),
                    cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0,0,0), 1, cv2.LINE_AA)
        cv2.putText(image, str(counter), (10,60),
                    cv2.FONT_HERSHEY_SIMPLEX, 2, (255,255,255), 2, cv2.LINE_AA)

        # Stage data (stage is None until the arm is first extended)
        cv2.putText(image, 'STAGE', (65,12),
                    cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0,0,0), 1, cv2.LINE_AA)
        cv2.putText(image, stage or "", (60,60),
                    cv2.FONT_HERSHEY_SIMPLEX, 2, (255,255,255), 2, cv2.LINE_AA)

        # Render detections
        mp_drawing.draw_landmarks(image, results.pose_landmarks, mp_pose.POSE_CONNECTIONS,
                                  mp_drawing.DrawingSpec(color=(245,117,66), thickness=2, circle_radius=2),
                                  mp_drawing.DrawingSpec(color=(245,66,230), thickness=2, circle_radius=2))

        cv2.imshow('Mediapipe Feed', image)

        if cv2.waitKey(10) & 0xFF == 27:
            break

cap.release()
cv2.destroyAllWindows()
```

- Counts one rep each time the elbow angle goes from extended (above 160°, "down") to curled (below 30°, "up").
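The counter is a two-state machine: an angle above 160° sets the stage to "down" (arm extended), and an angle below 30° while in "down" switches to "up" (arm curled) and counts one rep. Each rep therefore needs a full extension followed by a full curl, and staying curled cannot count twice. The sketch below replays that same logic over a list of angles so it can be tested without a webcam; the function name `count_reps` and the sample angles are made up for illustration.

```python
def count_reps(angles, down_thresh=160, up_thresh=30):
    """Replay the curl-counter logic over a sequence of elbow angles (degrees)."""
    counter = 0
    stage = None
    for angle in angles:
        if angle > down_thresh:                    # arm extended
            stage = "down"
        if angle < up_thresh and stage == "down":  # arm curled after an extension
            stage = "up"
            counter += 1
    return counter, stage

print(count_reps([170, 90, 20, 100, 170, 25]))  # (2, 'up')
print(count_reps([20, 25, 15]))                 # (0, None): never extended first
print(count_reps([170, 20, 25, 22]))            # (1, 'up'): staying curled is one rep
```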
